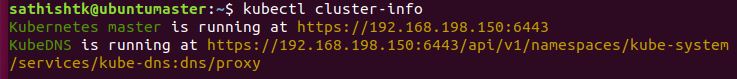

So, you have your test Kubernetes cluster up and running, and after a fresh reboot you try to check the status of your cluster by typing these commands:

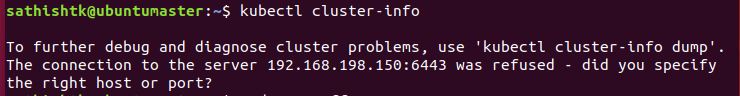

```shell
kubectl cluster-info
```

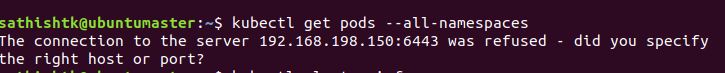

You start getting a connection error, so you try the next command to get the status of your pods:

```shell
kubectl get pods --all-namespaces
```

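Same error. Most of the time, the fix is to disable swap. With its default settings the kubelet refuses to start while swap is enabled, and on a kubeadm-based cluster the API server itself runs as a static pod managed by the kubelet, so once a reboot turns swap back on, `kubectl` has nothing to connect to.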
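Before turning swap off, you can check whether it is actually enabled. The sketch below is a minimal check, assuming a Linux host where `/proc/swaps` contains one header line followed by one line per active swap device. The function name `swap_active` is hypothetical, not part of any Kubernetes tooling.

```shell
#!/usr/bin/env bash
# Hypothetical helper: returns success (exit 0) when at least one swap device is active.
# Assumes the Linux /proc/swaps format: a header line, then one line per active device.
swap_active() {
  local swaps_file="${1:-/proc/swaps}"
  # Skip the header and count the remaining non-empty lines (active swap devices).
  [ "$(tail -n +2 "$swaps_file" 2>/dev/null | grep -c .)" -gt 0 ]
}

if swap_active; then
  echo "Swap is ON: with default settings the kubelet will refuse to start."
else
  echo "Swap is off."
fi
```

On most distributions, `swapon --show` (which prints nothing when no swap is active) or the Swap row of `free -h` gives the same answer without a script.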

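To turn off every active swap device, run:

```shell
sudo swapoff -a
```

Keep in mind that `swapoff -a` only lasts until the next reboot: swap entries listed in `/etc/fstab` are typically activated again at boot, which is why the problem shows up after a fresh restart. Note also that we are talking about a test cluster here; on a production cluster, work with your Linux sysadmin to understand the impact of these changes. Finally, when you run `kubectl cluster-info` again, you should get this message: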